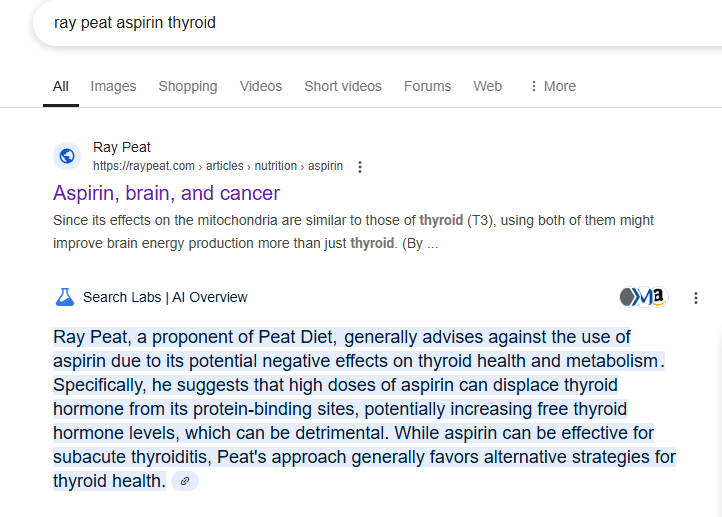

Google AI search results straight up lying about Ray Peat. Just outright fabrication. Unbelievable!

-


A proponent of the Peat diet?! Aspirin bad for thyroid and advised against using?!?!

This doesn't feel like the typical AI hallucination. I've never seen a hallucination this bad. That looks like it's been tuned to smear Ray Peat and give misinformation about his work.

I wonder if big pharma execs put in the call?

-


@Hearthfire said in Google AI search results straight up lying about Ray Peat. Just outright fabrication. Unbelievable!:

This doesn't feel like the typical AI hallucination.

PeatBot will do it too depending on how you prompt it. You can steer it toward or away from bradsters RAG.

-

At best AI is too “dumb” or one dimensional to understand the truth of Peat.

At worst it’s been programmed to lie to keep us enslaved….

Resist

-

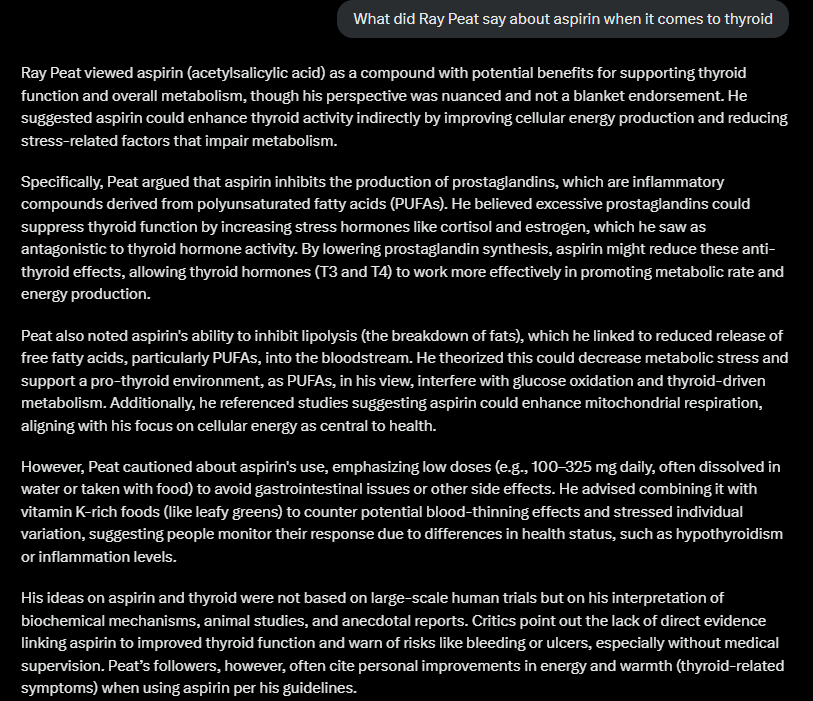

AI is getting frighteningly good at tasks and understanding context, and can summarize the ideas and work of known people very well. I went and prompted Grok to compare it with the Google result. You be the judge:

That makes me think they must have tuned the Google result to be anti-Peat. I mean, most mainstream opinions on him are negative. The Grok result got it right, but still mentioned the mainstream criticism. It wouldn't be that surprising if they purposely tuned it to smear him. It is scary though. The same people who said trust the experts, wear masks, take the vaccine and don't question it, are possibly smearing a guy whose body of work and knowledge is legit... It can only mean one thing. They DON'T want people to be healthy. I see the signs of that everywhere I look. These controlling institutions are trying to keep people sick, and not just keep them sick, but make them sicker!

-

"ray peat aspirin thyroid" and "What did Ray Peat say about" are two very different things when it comes to token prediction. Apart from the prompt, the Gemini vs Grok result looks more like a labelling/curation or class/reward failure than a specific attempt at malice.

Google is making a mistake by attempting to integrate this with traditional search, in my opinion, but they really have no other way to push it and they need interaction to mine for improvement. xAI has Twitter; closedAI has the public memory for popularising it. People are probably more familiar with DeepSeek than Gemini for the competitive coverage.

Google and Apple remain concerning for attestation in their mobile operating systems and the push to have us all scanning digital papers.

-


@Hearthfire yea bro google does this SMH “democracy” “nonpartisan”

Bruh

-

The Google AI summary doesn't pop up for longer search terms. I gave it the same Grok prompt I used and it won't summarize it.

Don't you find it strange, even with so little context given in the search term, it chose to say all the opposite things that Peat said, instead of what he actually said? I've mostly gotten correct results from that Google AI summary about everything else.

I think you're giving a company that has been repeatedly caught tampering with search results during elections (to the benefit of the Democrat side) a little too much slack.

Peat represents everything the left hates. Self agency. Thinking for yourself. Researching things on your own. Making your own choices about your health based on sound information. They despise these things and would strip away the right to exercise them if they could, like they tried to during Covid.

-


The answer to the same prompt there is much better. The UI and inference process are more comparable to Grok's.

@Hearthfire said in Google AI search results straight up lying about Ray Peat. Just outright fabrication. Unbelievable!:

Don't you find it strange, even with so little context given in the search term, it chose to say all the opposite things that Peat said, instead of what he actually said? I've mostly gotten correct results from that Google AI summary about everything else.

Knowing how LLMs work, not particularly. What a whole human is doing when they're balancing sources and finding edges on the internet or elsewhere is remarkable. An LLM can't do that, yet.